Chinese AI Model Emu3 Handles Text, Image, Video Seamlessly

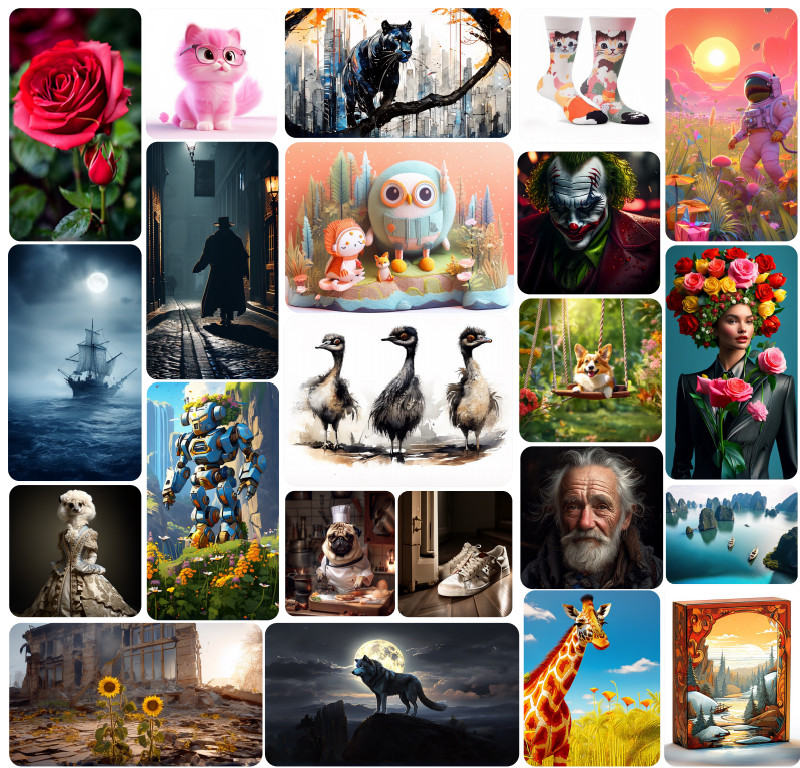

On October 21, the Beijing Academy of Artificial Intelligence (BAAI), a Chinese non-profit organization engaged in AI R&D, released Emu3, a multimodal AI model that seamlessly integrates text, image, and video modalities into a single, unified framework.

The BAAI research team said Emu3 is expected to be used in scenario applications such as robot brains, autonomous driving, multimodal dialogue and inference.

Emu3, based solely on next-token prediction, proves that next-token prediction can be a powerful paradigm for multimodal models.

The existing multimodal AI models are mostly designed for specific tasks. Each has its corresponding architecture and methods. For instance, in the field of video generation, many developers use the diffusion in time (DiT) architecture, as referenced by Sora. Other models such as Stable Diffusion are used for text-to-image synthesis, Sora for text-to-video conversion, and GPT-4V for image-to-text generation.

In contrast to these models, which have a combination of isolated skills rather than an inherently unified ability, Emu3, eliminates the need for diffusion or compositional approaches. By tokenizing images, text, and videos into a discrete space, BAAI has developed a single transformer from scratch.

Emu3 outperforms several well-established task-specific models in both generation and perception tasks, surpassing flagship models such as SDXL and LLaVA.

In September, BAAI open-sourced the key technologies and models of Emu3 including the chat model and generation model after supervised fine-tuning.

Emu3 has been receiving rave reviews from overseas developers. "For researchers, a new opportunity has emerged to explore multimodality through a unified architecture, eliminating the need to combine complex diffusion models with large language models. This approach is akin to the transformative impact of transformers in vision-related tasks," AI consultant Muhammad Umair said on social media platform Meta.

While next-token prediction is considered a promising path towards artificial general intelligence, it struggled to excel in multimodal tasks, which were dominated by diffusion models such as Stable Diffusion and compositional approaches like CLIP combined with large language models.

Raphael Mansuy, co-founder of QuantaLogic, an AI agent platform, thinks that Em3 has significant implications for Al development. Mansuy wrote on X that Em3's success suggests several key insights: Next-token prediction as a viable path to general multimodal Al; potential for simplified and more scalable model architectures; challenge to the dominance of diffusion and compositional approaches.